Local government leaders are increasingly talking about data sharing and democratisation as essential for better public services. Even under budget pressure, councils are starting to consider longer-term planning because it enables investment in early intervention and prevention approaches that are impossible without cross-organisational data visibility.

2026 DATA TRENDS FOR THE UK PUBLIC SECTOR

What is shaping the UK Public Sector?

The UK public sector enters 2026 facing unprecedented complexity. AI adoption is accelerating. New legislation is reshaping how data is accessed and shared. Public expectations for transparency are rising, while financial and workforce pressures are intensifying across central government, local government, health, and education.

Amid all of this, one factor is repeatedly determining whether transformation succeeds: data readiness. Not the number of dashboards a department can build, or how ambitious its AI strategy sounds, but whether its data is accessible, governed, interoperable, and usable by the people who need it.

This report brings together the most important data trends shaping the UK public sector in 2026, grounded in recent government reviews, policy direction and workforce research, and strengthened by “voice of the field” insights from Snowflake’s public sector teams working daily with UK organisations. Those insights add valuable context: what decision-makers are asking for, what barriers continue to stall progress, and which messages genuinely resonate in real conversations.

Across every subsector, a consistent pattern emerges: leaders want AI and automation, but what they often need first is better data foundations, faster time-to-value, and solutions that don’t depend on scarce specialist skills.

What we’re hearing across the public sector right now

Across local government, central government, and the NHS, organisations are under pressure to deliver more with less. Budget constraints are forcing tough choices, but they are also driving a shift from short-term fixes toward more strategic planning where a credible multi-year case for modernisation can be made.

In local and regional government, we are seeing renewed momentum in data and AI as a lever for improved service delivery, particularly as authorities prepare for reorganisation and seek to reduce technical debt that prevents meaningful change. There is also growing focus on adjacent markets such as housing associations, where legacy constraints and resource shortages make “lightweight” modernisation approaches attractive.

In central government, data sharing and collaboration are becoming decisive differentiators. Initiatives such as data libraries and marketplace approaches reflect a desire to unlock wider economic and social value from government datasets, while procurement pressures are also increasing the importance of open standards and reducing vendor lock-in.

In healthcare, significant organisational change and workforce pressure are pushing the system toward more integrated, interoperable approaches. At ICB level, the focus often centres on population health, inequality and data sharing, while at Trust level the conversation often turns quickly to ROI and cost reduction through modernising legacy data estates.

£45bn estimated annual unrealised savings opportunity through digitisation/modernisation across the UK Public Sector

Cross-Agency Data Sharing Becomes a Core Requirement

For many years, cross-government data sharing was more aspiration than reality. In 2026, that gap is closing. Legislative and strategic direction has made it clear that public servants must be able to access data across services securely and effectively, because the outcomes government is accountable for cannot be delivered within organisational boundaries.

Cross-agency data sharing is now central to joined-up public services: the ability to coordinate health and social care, respond to safeguarding risks, manage housing demand, prevent fraud, and deliver early intervention.

When data is trapped inside a single department or system, organisations default to reactive delivery, arriving late, spending more, and treating symptoms rather than preventing problems.

This shift is not simply technical. Effective sharing requires shared governance standards, clear permission models, consistent data definitions, and architectures designed for secure collaboration rather than brittle point-to-point integrations.


Central government conversations increasingly centre on data sharing as an asset strategy, with organisations exploring how to make government datasets reusable across arm’s length bodies and even wider sectors. This is accelerating interest in marketplace approaches and mature collaboration capabilities, rather than each department building its own isolated platform.

Protecting vulnerable young people starts with better data.

In this video, see how a multi-agency youth justice service partnered with Catalyst BI to transform fragmented, manual data into a single, secure Snowflake platform. By automating data from youth services, police, health, and community partners, teams gained real-time visibility into risk indicators and emerging hotspots.

The result? Four hours saved every day, faster insights, and earlier, more targeted interventions that strengthen safeguarding and collaboration across the service.

- Safeguarding: faster risk visibility across social care, schools, police, and health partners

- Hospital discharge / delayed transfers: joined-up view of capacity, care packages, and patient needs

- Housing + prevention: linking arrears, risk signals and support pathways to reduce evictions

- Fraud & error reduction: cross-checking benefits, council tax, tenancy and other datasets

- Population health: linking health + social determinants to target interventions

- Data sharing governed by clear principles and auditable access controls

- Common definitions and data standards (so “the same metric” means the same thing)

- Trusted, reusable datasets (“data products”) rather than one-off exports

- A secure platform for governed sharing (fine-grained access, audit, collaboration)

- A workshop-led approach to define the right data-sharing model and build a pragmatic roadmap

- Delivery accelerators that prioritise real outcomes (not just architecture)

Book a Data Discovery Workshop

Don’t wait for challenges to escalate. Book a Data Strategy Workshop to identify opportunities and tailor solutions to your unique needs.

Data Governance and Public Trust Move to the Forefront

Trust has become a defining theme of public sector transformation. As organisations increase data sharing and adopt AI, citizens are more aware of and more concerned about how their data is used. Trust is shaped by transparency, accountability, fairness, and whether organisations can demonstrate robust safeguards.

At the same time, governance challenges inside organisations remain significant. Across the public sector, governance maturity varies widely: some teams have clear stewardship roles, metadata practices and access models, while others still rely on informal approaches that do not scale.

That inconsistency creates operational inefficiency and increases risk, especially as data sharing expands.

Governance in 2026 is no longer “a compliance exercise.” It is a strategic capability that enables data to be shared safely, used responsibly, and trusted externally. It is also increasingly visible: as transparency requirements grow, organisations must show not just that they have controls, but that they can explain how and why decisions are made.


In councils, governance and access control are increasingly decisive because so much data is highly sensitive: social care, safeguarding, housing and special educational needs. Leaders want confidence that strict role-based and attribute-based access controls can be applied across a unified environment without increasing risk.
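To make the distinction concrete, here is a minimal sketch of how a role-based check and attribute-based checks might be combined into a single access decision. Everything in it is an assumption for illustration: the role names, data domains, user attributes and the `can_access` function are hypothetical and do not describe any specific platform's policy engine.

```python
from dataclasses import dataclass

# Hypothetical mapping of roles to the data domains they may touch.
ROLE_DOMAINS = {
    "social_worker": {"social_care", "safeguarding"},
    "housing_officer": {"housing"},
    "analyst": {"housing", "social_care"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    team_area: str                  # attribute: locality the user serves
    cleared_for_identifiable: bool  # attribute: may view named records


@dataclass(frozen=True)
class Record:
    domain: str
    area: str
    identifiable: bool


def can_access(user: User, record: Record) -> bool:
    """Grant access only if the role check and every attribute check pass."""
    # Role-based check: does the role cover this data domain at all?
    if record.domain not in ROLE_DOMAINS.get(user.role, set()):
        return False
    # Attribute-based check: the record must belong to the user's locality.
    if record.area != user.team_area:
        return False
    # Attribute-based check: identifiable records need explicit clearance.
    if record.identifiable and not user.cleared_for_identifiable:
        return False
    return True


if __name__ == "__main__":
    worker = User("A. Example", "social_worker", "north", True)
    case_note = Record("safeguarding", "north", True)
    print(can_access(worker, case_note))
```

In practice these rules would normally be expressed as policies in the data platform itself rather than in application code, so that the same decision applies however the data is queried.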


47% of central government and 45% of NHS services still lack a digital pathway, and very few services avoid manual processing altogether.

- Sensitive-data access control: social care, safeguarding, housing, mental health notes

- Auditability: answering “who accessed what, when, and why?”

- Data quality improvement: reducing duplicate records, inconsistencies, missing fields

- Transparency-by-design: ability to explain data use and automated decisions

- Trusted reporting: one version of the truth for performance, finance, and outcomes

- Clear ownership: stewards, custodians, product owners

- Consistent metadata, lineage and documentation

- Role/attribute-based access and policy-driven controls

- A published “trust model” for using data and AI responsibly

- Platform capabilities: governance, auditing, access control, lineage foundations

- Operating model design: who owns what, how decisions get made

- Data product approach: trusted datasets that are reusable and measurable

Cloud Modernisation Becomes the Only Sustainable Path Forward

Public sector organisations are under rising fiscal pressure and scrutiny. Technology spend is high, and the operational burden of legacy infrastructure continues to drain capacity and budgets that could otherwise fund service improvements.

Modernisation is now less about innovation theatre and more about resilience and sustainability. Organisations need platforms that reduce duplication, improve cost visibility, and enable them to scale without expensive re-engineering.

Cloud-native approaches can offer consumption-based economics, faster iteration and reduced infrastructure overhead, but only if implemented with strong governance and realistic adoption planning.

Modernising data platforms is particularly important because data underpins everything from operational reporting to service delivery analytics to AI readiness. Without modern data foundations, the public sector cannot deliver efficiency at scale.


Local government reorganisation is increasing appetite for platforms that can survive structural change and deliver value quickly. Housing associations are also a growth area: organisations with significant technical debt and a strong desire to use data and AI for efficiency, but without capacity to build large teams.

47% of central government services and 45% of NHS services lack a digital pathway.

- Modernising legacy data warehouses to reduce costs and outages

- Consolidating multiple reporting platforms into one governed environment

- Cost-to-serve analytics: seeing true costs by service, pathway, or department

- Automation: reduce manual data prep and reconciliation

- Faster reporting cycles: from monthly retrospective to near-real-time insight

- Consumption-based cost transparency and proactive cost governance

- Fewer duplicated pipelines and data stores

- A measurable reduction in manual reporting and infrastructure overhead

- A platform ready for analytics and AI without major rework

- A platform approach designed for scalable analytics + cost governance

- Migration and consolidation patterns that reduce risk

- A workshop to define the roadmap and business case aligned to Public Sector constraints

AI Readiness Depends on Data Quality, Not Algorithms

AI has become a dominant theme in public sector conversations. However, many decision-makers struggle to articulate specific AI goals. Often what they are really asking for is better data: more accessible, more reliable, and better governed. Without that, AI will either fail or introduce unacceptable risk.

AI systems amplify whatever they are fed. If the underlying data is incomplete, biased, inconsistent or fragmented, AI outputs will be too.

This is especially problematic in public services, where poor decisions affect real lives.

True AI readiness therefore starts with data readiness: data quality, interoperability, structured and unstructured data support, governance, and the ability to monitor and audit outcomes. Organisations that invest in these foundations will be able to move quickly and safely into AI use cases. Organisations that do not will either stall or risk serious harm.
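As an illustration of what a basic data quality check could look like before a dataset is treated as AI-ready, the sketch below applies a few simple rules to a list of records and counts failures and duplicate identifiers. The field names (`person_id`, `referral_date`, `age`), the rules and the `quality_report` function are all hypothetical, chosen only to show the idea.

```python
from typing import Callable

Rule = Callable[[dict], bool]

# Hypothetical quality rules; each returns True when a record passes.
RULES: dict[str, Rule] = {
    "has_id": lambda r: bool(r.get("person_id")),
    "has_referral_date": lambda r: bool(r.get("referral_date")),
    "valid_age": lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120,
}


def quality_report(records: list[dict]) -> dict:
    """Count rule failures and duplicate identifiers across the records."""
    failures = {name: 0 for name in RULES}
    seen_ids: set = set()
    duplicate_ids = 0

    for record in records:
        # Apply every rule to every record and tally the failures.
        for name, rule in RULES.items():
            if not rule(record):
                failures[name] += 1

        # Track repeated identifiers; records without an id are skipped
        # here because the "has_id" rule already reports them.
        person_id = record.get("person_id")
        if person_id:
            if person_id in seen_ids:
                duplicate_ids += 1
            else:
                seen_ids.add(person_id)

    return {
        "total": len(records),
        "failures": failures,
        "duplicate_ids": duplicate_ids,
    }


if __name__ == "__main__":
    sample = [
        {"person_id": "p1", "referral_date": "2026-01-05", "age": 14},
        {"person_id": "p1", "referral_date": "2026-01-06", "age": 14},
        {"person_id": "", "referral_date": None, "age": 130},
    ]
    print(quality_report(sample))
```

A report like this could be run on a schedule and tracked over time, so that a dataset's owner can see whether quality is improving before it is used for modelling.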


Only 26% of governments have deployed analytics/AI partially or fully

- Demand forecasting: A&E, crisis response, contact centres, housing services

- Risk stratification: identifying vulnerable cohorts for early intervention

- Operational automation: triaging referrals, routing cases, prioritising queues

- Unstructured data insight: extracting themes from documents and notes

- Service performance optimisation: identifying bottlenecks and variation

- Defined “AI-ready datasets” with quality rules, lineage, and owners

- Human-in-the-loop oversight and clear evaluation metrics

- The ability to explain outputs and monitor drift/impact

- Structured + unstructured data foundations in one governed environment

- Practical AI readiness workshops (use-case led, risk-aware)

- Delivery accelerators that turn “AI ambition” into measured pilots

Workforce Capability Becomes the Greatest Barrier to Progress

Even when organisations have the right technology plans, capability often limits progress. Digital, data and analytical skills shortages remain persistent across the public sector. Teams are stretched, recruitment is difficult, and turnover in specialist roles reduces continuity.

This creates a transformation gap: organisations invest in tools but cannot fully adopt them. It also shapes platform decisions. Increasingly, public bodies value solutions that are quicker to implement, require fewer specialist engineers, and support familiar skills such as SQL and Python.

Workforce challenges are especially acute where major organisational restructuring is underway, or where procurement and analytical skills are under pressure. Without a deliberate capability strategy (data literacy, training pathways, governance roles, and a realistic operating model), technology investments will under-deliver.


58% of Public Sector organisations cite skills shortages/talent gaps as a top barrier to digital transformation

- Self-service analytics for operational teams without ticket queues

- Reusable pipelines and templates that reduce reliance on specialists

- Automation of routine reporting to free scarce analyst capacity

- Standard dashboards for executive visibility and performance

- Training pathways for data literacy across non-technical roles

- Clear roles and responsibilities (product owners, stewards, platform team)

- Less dependency on niche specialist skills

- More time spent on insight and improvement, less on wrangling data

- Platform usability that supports SQL/Python familiarity

- Delivery patterns that reduce engineering overhead

- Training + enablement as part of the implementation, not an afterthought

Data Quality and Interoperability Become Essential for Real-Time Services

The ability to access and integrate high-quality data is now foundational to the public sector’s operational effectiveness. Yet the State of Digital Government Review highlights striking gaps: only 27% of organisations believe they have a meaningful operational view of their services, and 70% say their data landscape is fragmented and not interoperable.

This fragmentation has direct consequences. It slows decision making, undermines performance reporting, increases risk and prevents real-time insight. For AI initiatives, poor interoperability leads to inconsistent model inputs and unreliable outputs.

The Department for Education’s work in enabling real-time attendance monitoring illustrates the transformative impact of solving interoperability challenges. By using supplier-held data to bypass incompatible school systems, the department achieved visibility that was previously impossible.

Improving interoperability is not merely a technical exercise; it requires consistent standards, governance, and the ability to integrate structured and unstructured data into a coherent whole. When this is achieved, the benefits can be transformational, enabling real-time visibility and cross-system insight.


70% of Public Sector Organisations say their data landscape is not coordinated/interoperable and lacks a unified source of truth

- Operational command centres: beds, workforce, demand, backlogs

- Unified performance reporting across departments and agencies

- Case management insight: joining data across pathways and providers

- Data quality monitoring: detect breaks, missing values, drift

- Single source of truth for finance + operations + outcomes

- A unified layer of trusted datasets with common definitions

- Data quality rules monitored continuously

- Integration patterns that allow new sources to be added quickly

- Unified, governed platform layer for consistent datasets

- Interoperability roadmaps (standards + operating model + architecture)

- Quick-win dashboards that prove the value of a single source of truth

Legacy Systems and Technical Debt Continue to Constrain Transformation

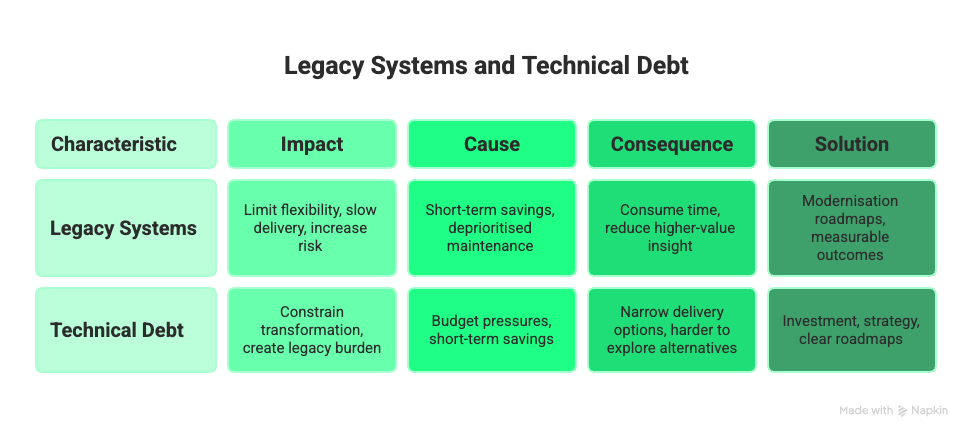

Technical debt remains one of the most persistent barriers to progress in the public sector. Budget pressures often push organisations toward short-term savings rather than long-term investment, and spending cycles can deprioritise service maintenance and improvement, creating a compounding legacy burden.

Legacy systems limit flexibility, slow delivery, and increase operational risk. They also consume time: analysts and engineers spend energy maintaining pipelines, reconciling data discrepancies, and managing fragile dependencies rather than delivering higher-value insight.

A further systemic challenge is that digital expertise is not always involved early enough in policy and investment decisions, narrowing delivery options prematurely and making it harder to explore practical alternatives.

Breaking the cycle requires both investment and strategy: clear modernisation roadmaps, measurable outcomes that support “value for money” cases, and technology choices that reduce ongoing overhead rather than adding new complexity.

- Modernise the legacy warehouse (reduce failures, speed up reporting)

- Consolidate fragmented marts into reusable data products

- Automate pipelines to reduce operational overhead

- Replace manual reconciliation with consistent datasets

- Better investment cases using outcome metrics (value for money)

- Fewer system dependencies and fewer “hand-built” pipelines

- Reduced time spent maintaining reports and fixing failures

- Clear metrics showing value and resilience improvements

- Low-barrier entry models that prove value quickly

- Secure platform governance for sensitive data domains

- Migration strategies aligned to public-sector constraints

Citizens Expect Ethical, Transparent and Accountable Data Use

As AI and automated decision-making become more common, citizens increasingly expect transparency, fairness and accountability from public services. Ethics is no longer an abstract principle; it is being operationalised through standards, documentation expectations and governance requirements.

In practical terms, this means organisations must understand their data pipelines, control access to sensitive data, document how automated decisions are made, and provide mechanisms for challenge and review.

Ethical service delivery depends on trustworthy data foundations.

Ethical considerations are especially prominent in areas where decisions directly affect individuals’ wellbeing: social care, housing allocation, safeguarding, eligibility, health interventions, and educational support. In these contexts, poor governance and opaque decision-making can create real harm and significant reputational damage.


26% of the public believe government uses AI responsibly (showing the trust gap that must be addressed as AI expands)

- Algorithm transparency records for automated decisions

- Bias monitoring in models and datasets

- Explainable eligibility or prioritisation decisions

- Consent and access auditing across sensitive domains

- Outcome monitoring: do interventions reduce inequality and harm?

- Documented models, data lineage, and governance controls

- Transparent decision pathways and appeal mechanisms

- Strong oversight with citizen/service-user impact monitoring

- Governance, auditing and controlled access foundations

- Practical frameworks for transparency and ethical adoption

- Data foundations that reduce bias and improve quality

Cross-cutting theme: Time-to-Value, Skills-Light Delivery & Open Standards (market signal)

A powerful theme emerging across public sector conversations is the demand for faster, lower-barrier progress. Organisations need tangible outcomes in weeks and months, not years. This is driven by budget constraints, workforce shortages, and the urgency to deliver visible improvements.

At the same time, procurement trends increasingly favour open standards and approaches that reduce lock-in. This is partly about accountability and partly practical: enabling organisations to query and reuse data across tools and platforms.

Together, these pressures are shaping a market where time-to-value, governance, skills-light adoption and open standards increasingly define what “good” looks like.

Cross-cutting recap

- Time-to-value is becoming a procurement and delivery requirement.

- Skills shortages elevate simplicity as a strategic differentiator.

- Open standards are rising as a guardrail against lock-in.

- Starter packs/bundles help prove value and reduce adoption risk.

2026 Must Be the Year of Data Readiness

Across all trends and subsectors, one message repeats: AI, automation and digital transformation cannot succeed without strong data foundations.

The trends in this report are not a list of problems; they are a map of where the public sector is heading. The organisations that succeed in 2026 won’t be the ones with the most ambitious AI slogans. They will be the ones that build trusted data foundations, choose pragmatic use cases, deliver value quickly, and create operating models that work in the real world of constrained budgets and scarce skills.

Data readiness is now at the centre of public sector performance.

This is why 2026 must be the year public bodies prioritise data readiness.

Catalyst BI’s Public Sector Data Strategy Workshop

To help organisations navigate these challenges, Catalyst BI offers a Data Strategy Workshop tailored to UK public-sector needs.

The workshop provides:

- A practical data maturity assessment

- Governance and interoperability diagnostics

- A clear roadmap for modernisation

- AI readiness evaluation

- A business-case aligned with government spending frameworks

This is the ideal starting point for public bodies who need a realistic, actionable plan to improve data readiness and unlock the potential of AI.

Prefer to speak to us directly?

Contact us at +44 7876 133 996 or email bi@catalyst-it.co.uk.